Are you curious about Artificial Intelligence (AI) but unsure where to start? Look no further! In this article, you will gain a comprehensive understanding of the basics of AI. From its fascinating history to key concepts such as machine learning, deep learning, and neural networks, this article has got you covered. Whether you are a beginner or looking to refresh your knowledge, this informative piece will equip you with the necessary fundamentals to navigate the world of Artificial Intelligence.

This image is property of blog.invgate.com.

History of Artificial Intelligence

Early Beginnings

The history of artificial intelligence as a research field begins in the 1950s, when pioneers started exploring ways to create machines that could mimic human intelligence. The term “artificial intelligence” was coined by John McCarthy and his co-authors in their 1955 proposal for a 1956 summer workshop at Dartmouth College, an event widely regarded as the founding of the field. Early efforts focused on logic-based systems that manipulated symbols to solve problems such as proving theorems and playing games. Researchers were optimistic about the potential of AI and believed that machines would soon be able to perform tasks that required human intelligence.

The AI Winter

However, the initial enthusiasm for AI was followed by a period known as the “AI Winter.” In the mid-1970s, and again in the late 1980s, progress in AI research slowed and funding for AI projects decreased. The high expectations for AI had not been met, and the limitations of the existing techniques had become apparent. This led to skepticism and a decline in interest in the field; many people believed that AI was just hype and that true artificial intelligence could not be achieved.

Current Developments

In recent years, there has been a resurgence of interest in AI, driven by advancements in computing power and the availability of vast amounts of data. Breakthroughs in machine learning and deep learning have opened up new possibilities for AI applications. Companies like Google, Facebook, and Amazon are investing heavily in AI research and development. AI is now being integrated into a wide range of industries, from healthcare and finance to transportation and entertainment.

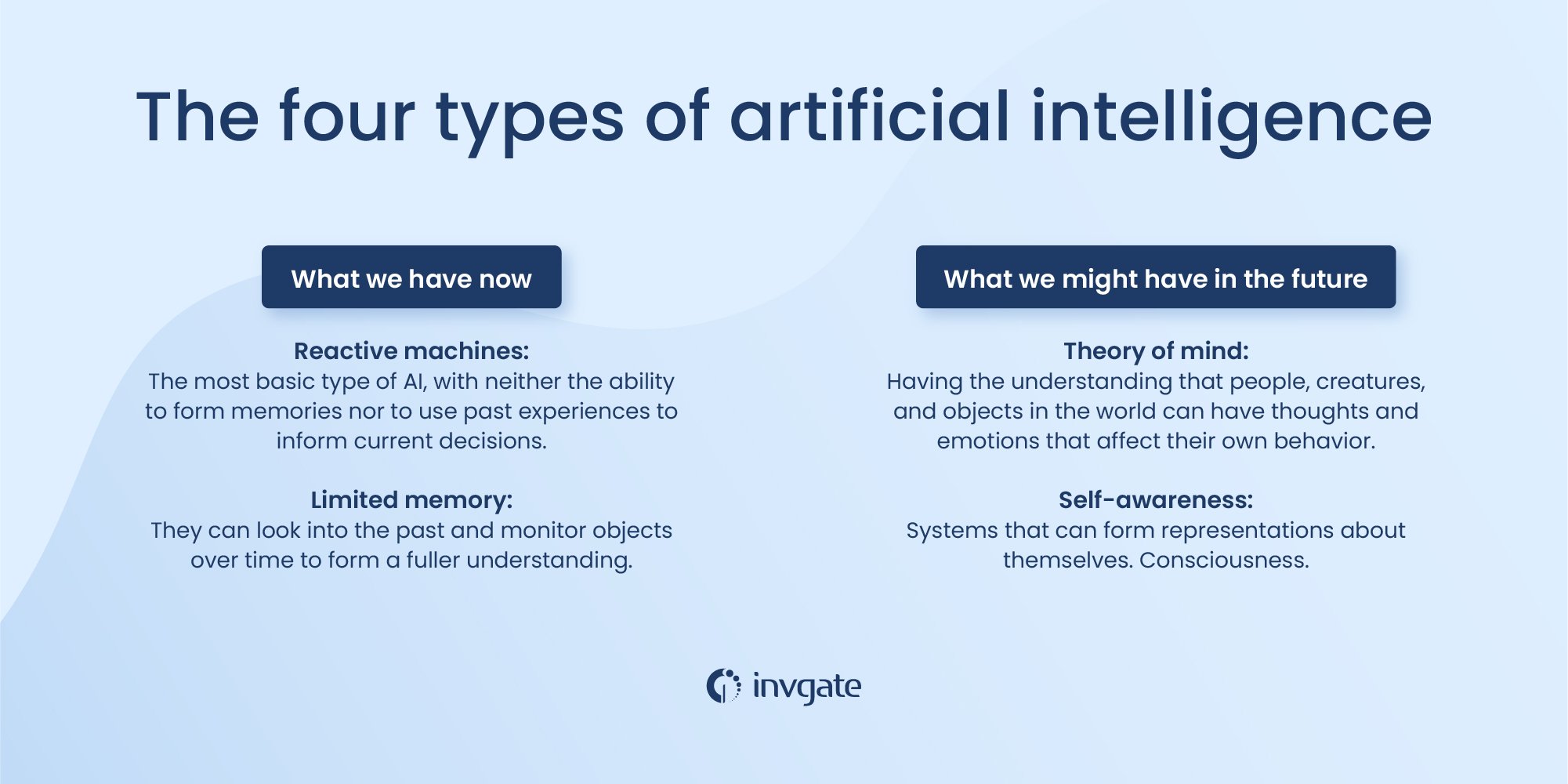

Types of Artificial Intelligence

Narrow AI

Narrow AI, also known as weak AI, refers to AI systems that are built for a specific task and perform well only within that domain, such as playing chess or diagnosing diseases. Narrow AI is the most common form of AI at present, and many applications we encounter in our daily lives, such as virtual assistants and recommendation systems, are examples of narrow AI.

General AI

General AI, also known as strong AI, is the concept of AI systems that possess the same level of intelligence as a human being. These systems would be capable of understanding and learning any intellectual task that a human can do. While the development of general AI remains a long-term goal for many researchers, it is still largely theoretical and has not yet been achieved.

Superintelligence

Superintelligence refers to an AI system that surpasses human intelligence and has the ability to outperform humans in virtually every task. This concept raises ethical concerns about the potential consequences of creating a superintelligent machine. While superintelligence is currently the subject of much speculation and debate, it is not yet a reality.

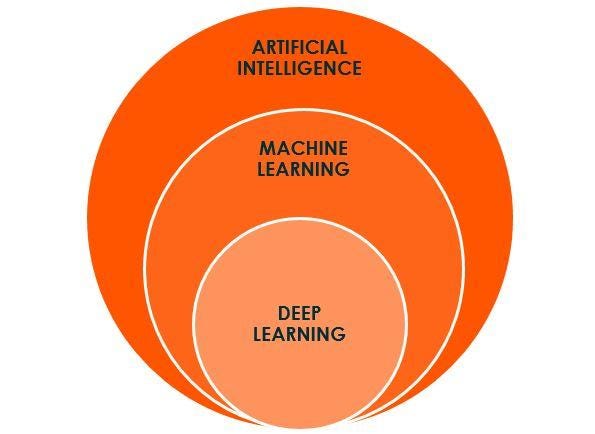

Machine Learning

Definition and Overview

Machine learning is a subset of AI that focuses on enabling computers to learn from data and make predictions or decisions without being explicitly programmed to do so. It involves the development of algorithms and statistical models that allow computers to automatically improve their performance on a specific task through experience. Machine learning is at the heart of many AI applications and is the driving force behind recent advancements in the field.

Supervised Learning

Supervised learning is a type of machine learning where the algorithm is trained on labeled data, meaning the data is accompanied by the correct answers. The algorithm learns from these labeled examples and can then make predictions or classifications on new, unseen data. This type of learning is commonly used in tasks such as image recognition, speech recognition, and spam filtering.
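
To make this concrete, here is a minimal sketch of one simple supervised algorithm, k-nearest neighbors (chosen for illustration; many other supervised methods exist). It keeps the labeled examples and labels a new point by majority vote among the `k` closest ones. The toy points and the labels `A` and `B` are hypothetical.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train_points, train_labels, query, k=3):
    """Predict a label for `query` by majority vote among its k nearest labeled examples."""
    # Pair every training example with its distance to the query, closest first.
    by_distance = sorted(
        (euclidean(p, query), label) for p, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical labeled training data: two groups of 2-D points.
train_points = [(1.0, 1.2), (0.8, 0.9), (1.3, 0.7), (5.0, 5.1), (5.4, 4.8), (4.7, 5.3)]
train_labels = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(train_points, train_labels, (1.1, 1.0)))  # A
print(knn_predict(train_points, train_labels, (5.2, 5.0)))  # B
```

Here `k=3`; a larger `k` makes predictions less sensitive to individual mislabeled examples but can blur the boundary between classes.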

Unsupervised Learning

Unsupervised learning is a type of machine learning where the algorithm is trained on unlabeled data, meaning there are no predefined answers. The algorithm learns to find patterns, structures, or relationships in the data without any guidance. Unsupervised learning is useful for tasks such as clustering, anomaly detection, and recommendation systems.
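
As a sketch of clustering, the first unsupervised task listed above, here is the k-means algorithm in plain Python. It is never told which group any point belongs to: it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. To keep the example deterministic, it seeds the centroids with farthest-point selection, an assumption of this sketch; library implementations commonly use random or k-means++ seeding instead. The data is hypothetical.

```python
import math

def farthest_point_init(points, k):
    """Deterministic seeding: start from the first point, then repeatedly add the
    point farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids

def kmeans(points, k, max_iterations=100):
    centroids = farthest_point_init(points, k)
    for _ in range(max_iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if the cluster is empty).
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # centroids stopped moving, so the assignments are stable
        centroids = new_centroids
    return centroids, clusters

# Hypothetical 2-D data with two visible groups and no labels.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, clusters = kmeans(points, k=2)
print(clusters)
```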

Reinforcement Learning

Reinforcement learning is a type of machine learning where an agent learns to make decisions through trial and error. The agent interacts with an environment, receives feedback in the form of rewards or penalties, and learns to take actions that maximize the expected reward. Reinforcement learning has been successfully applied to tasks such as game playing, robotics, and autonomous vehicle control.
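
The trial-and-error loop described above can be sketched with tabular Q-learning, one classic reinforcement learning algorithm. The environment is a hypothetical five-state corridor: the agent starts at state 0, can move left or right, and receives a reward of 1 only on reaching state 4. The learning rate `alpha`, discount factor `gamma`, and exploration rate `epsilon` are illustrative values, not recommendations.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def env_step(state, action):
    """Toy environment: returns (next_state, reward, done). Reward 1.0 only at the goal."""
    if action == 0:
        next_state = max(0, state - 1)  # the left wall keeps the agent at state 0
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]  # Q-table: q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: explore at random, otherwise exploit (ties broken at random).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = env_step(state, action)
            # Q-learning update toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q = q_learning()
greedy_policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

After training, the greedy policy should be to move right (action `1`) in every non-goal state. The agent was never told this; it inferred it from rewards propagated back through its Q-table.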

Deep Learning

Definition and Overview

Deep learning is a subfield of machine learning that focuses on training artificial neural networks with many layers, also known as deep neural networks. These networks are inspired by the structure and function of the human brain, with layers of interconnected artificial neurons that process and transmit information. Deep learning has revolutionized AI by enabling computers to learn and extract complex patterns from large amounts of data.

Neural Networks

Neural networks are the building blocks of deep learning. They are mathematical models loosely inspired by the structure and function of biological neurons. A neural network consists of layers of interconnected artificial neurons, where each neuron receives inputs, performs a computation, and passes its output to the next layer. In forward propagation, input data flows through the layers to produce a prediction; in backward propagation (backpropagation), the error in that prediction is propagated back through the layers to compute how much each weight contributed to it. Repeating these two steps lets a neural network learn to recognize patterns, make predictions, or make decisions.
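
The sketch below shows forward and backward propagation in a tiny network with one hidden layer, written in plain Python so every step is visible. It assumes sigmoid activations and a squared-error loss, and trains on the XOR problem, a classic toy task that no single-layer network can solve. A finite-difference check compares the gradient computed by backpropagation against a numerical estimate. The class name `TinyNet` and all hyperparameters are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyNet:
    """Toy network: 2 inputs -> `hidden` sigmoid neurons -> 1 sigmoid output."""

    def __init__(self, hidden=3, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Forward propagation: each layer computes weighted sums, then applies the activation.
        self.x = x
        self.h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        self.y = sigmoid(sum(w * h for w, h in zip(self.w2, self.h)) + self.b2)
        return self.y

    def backward(self, target):
        # Backward propagation for L = 0.5 * (y - target)**2 via the chain rule,
        # using the values cached by the most recent forward() call.
        dz2 = (self.y - target) * self.y * (1 - self.y)  # dL/d(output pre-activation)
        grad_w2 = [dz2 * h for h in self.h]
        dz1 = [dz2 * w * h * (1 - h) for w, h in zip(self.w2, self.h)]  # dL/d(hidden pre-activations)
        grad_w1 = [[d * xi for xi in self.x] for d in dz1]
        return grad_w1, dz1, grad_w2, dz2  # gradients for w1, b1, w2, b2

def loss(net, data):
    return sum(0.5 * (net.forward(x) - t) ** 2 for x, t in data)

def numerical_grad_w1(net, x, target, i, j, eps=1e-6):
    """Central finite-difference estimate of dL/dw1[i][j], to sanity-check backward()."""
    original = net.w1[i][j]
    net.w1[i][j] = original + eps
    loss_plus = 0.5 * (net.forward(x) - target) ** 2
    net.w1[i][j] = original - eps
    loss_minus = 0.5 * (net.forward(x) - target) ** 2
    net.w1[i][j] = original
    return (loss_plus - loss_minus) / (2 * eps)

def train(net, data, lr=0.5, epochs=5000):
    for _ in range(epochs):
        for x, t in data:
            net.forward(x)
            gw1, gb1, gw2, gb2 = net.backward(t)
            # Gradient-descent step: move every parameter against its gradient.
            for i in range(len(net.w2)):
                net.w1[i] = [w - lr * g for w, g in zip(net.w1[i], gw1[i])]
                net.b1[i] -= lr * gb1[i]
                net.w2[i] -= lr * gw2[i]
            net.b2 -= lr * gb2

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
net = TinyNet(seed=0)

net.forward((1, 0))
analytic = net.backward(1)[0][0][0]
numeric = numerical_grad_w1(net, (1, 0), 1, 0, 0)
print(f"backprop gradient {analytic:.8f} vs finite difference {numeric:.8f}")

before = loss(net, XOR)
train(net, XOR)
after = loss(net, XOR)
print(f"loss before training {before:.4f}, after {after:.4f}")
```

Gradient checking like this is a common way to catch bugs in hand-written backpropagation; deep learning frameworks compute these gradients automatically.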

Convolutional Neural Networks

Convolutional neural networks (CNNs) are a type of neural network that is particularly well-suited for image and video processing tasks. They consist of convolutional layers that apply learned filters to input data, pooling layers that downsample the data, and fully connected layers that perform the final classification or regression. CNNs have achieved remarkable success in tasks such as image classification, object detection, and image segmentation.
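
The two core CNN operations can be sketched in plain Python: sliding a small filter over an image, and downsampling the result with max pooling. The 3x3 vertical-edge filter here is hand-picked for illustration; in a real CNN the filter values are learned during training. What deep learning libraries call “convolution” is usually cross-correlation (the filter is not flipped), and that is what this sketch computes.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation: slide the kernel over the image without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]         # dark left half, bright right half
vertical_edge_kernel = [[-1, 0, 1] for _ in range(3)]  # hand-picked; a CNN would learn it
features = conv2d(image, vertical_edge_kernel)
pooled = max_pool2d(features)
print(features[0])  # [0, 3, 3, 0]
print(pooled)       # [[3, 3], [3, 3]]
```

The feature map responds (value 3) exactly where dark pixels meet bright ones, and pooling halves each spatial dimension while keeping the strongest responses.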

Recurrent Neural Networks

Recurrent neural networks (RNNs) are a type of neural network designed for sequential data, such as time series or text. Unlike feedforward neural networks, in which information flows in one direction from input to output, RNNs have connections between neurons that form directed cycles, allowing them to carry information from previous steps forward as a hidden state. RNNs are widely used in tasks such as natural language processing, speech recognition, and machine translation.
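
A minimal sketch of the recurrence, assuming a single hidden unit with a tanh activation and hand-picked weights (a real RNN uses vectors and weight matrices learned from data): at each step, the new hidden state depends on the current input and on the previous hidden state.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Single-unit vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    Returns the hidden state after each time step."""
    h = h0
    states = []
    for x in inputs:
        # The previous hidden state h feeds back in: this is the recurrent connection.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Same values in a different order give a different final state,
# because the hidden state carries information from earlier steps.
early = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
late = rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)
print(round(early[-1], 3), round(late[-1], 3))
```

Both sequences contain the same values, yet the final states differ because the hidden state records when the 1 appeared.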

This image is property of miro.medium.com.

Neural Networks

Structure and Function

Neural networks are composed of layers of artificial neurons, each loosely modeled on the behavior of a biological neuron. The structure of a neural network can vary depending on the task it is designed to solve, but the basic components remain the same: an input layer receives the data, one or more hidden layers perform intermediate computations, and an output layer produces the final result. Neurons in adjacent layers are connected by weights, which determine the strength of each connection.

Artificial Neurons

Artificial neurons are the fundamental units of a neural network. Each neuron computes a weighted sum of its input signals plus a bias term, where the weights determine the importance of each input, and then passes that sum through an activation function to produce its output. The perceptron is an early and simple form of artificial neuron that uses a step function as its activation. By adjusting the weights during training, neural networks can learn to make accurate predictions or decisions.
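
A minimal sketch of a single perceptron-style neuron and the classic perceptron learning rule, trained here on the logical AND function. AND is a hypothetical toy task chosen because it is linearly separable, which guarantees that the perceptron rule converges. The learning rate and epoch count are illustrative.

```python
def step_activation(z):
    return 1 if z >= 0 else 0

def perceptron_output(weights, bias, inputs):
    # Weighted sum of the inputs plus the bias, passed through a step activation.
    return step_activation(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_perceptron(data, lr=1.0, epochs=20):
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - perceptron_output(weights, bias, inputs)
            # Perceptron learning rule: nudge weights toward the correct answer.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print([perceptron_output(weights, bias, x) for x, _ in AND])  # [0, 0, 0, 1]
```

The weights only change when the neuron's output is wrong, and they stop changing once every training example is classified correctly.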

Activation Functions

Activation functions are mathematical functions applied to the weighted sum of a neuron’s inputs to introduce non-linearity into the network. Non-linearity is crucial: without it, a stack of layers would collapse into a single linear transformation, and the network could not model complex relationships in data. Some commonly used activation functions include the sigmoid function, which maps any input to a value between 0 and 1, and the rectified linear unit (ReLU), which outputs its input unchanged when it is positive and 0 otherwise.
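
Both activation functions mentioned above fit in a few lines of Python. This sketch is for illustration only; frameworks provide vectorized versions.

```python
import math

def sigmoid(z):
    """Maps any real number into (0, 1). Split into two branches so math.exp never overflows."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Rectified linear unit: returns z for positive inputs and 0 otherwise."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(f"z = {z:+.1f}   sigmoid = {sigmoid(z):.3f}   relu = {relu(z):.1f}")
```

The two-branch sigmoid avoids a real pitfall: the naive `1 / (1 + math.exp(-z))` raises `OverflowError` in Python for large negative `z`.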

Training and Optimization

Training a neural network involves adjusting the weights of the connections between neurons to minimize the difference between the network’s predictions and the desired outputs, as measured by a loss function. This is done through an iterative process called optimization, in which an optimization algorithm searches for the weight values that give the best performance. The most widely used approach is stochastic gradient descent (SGD) and its variants, such as Adam. SGD estimates the gradient of the loss function with respect to each weight from a small random sample of training examples, then moves each weight a small step in the direction opposite to its gradient.
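
A minimal SGD sketch, assuming the simplest possible model: a line `y = w * x + b` fitted to hypothetical noise-free points generated from `y = 2x + 1`. Each update uses a single example, visited in random order, and moves `w` and `b` against the gradient of that example's squared error. The learning rate and epoch count are illustrative.

```python
import random

def sgd_linear_regression(xs, ys, lr=0.05, epochs=200, seed=0):
    """Fit y ≈ w * x + b by stochastic gradient descent on the loss 0.5 * error**2."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # "stochastic": visit the examples in a random order
        for i in indices:
            error = (w * xs[i] + b) - ys[i]
            # dLoss/dw = error * x and dLoss/db = error; step against each gradient.
            w -= lr * error * xs[i]
            b -= lr * error
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # hypothetical data generated from y = 2x + 1
w, b = sgd_linear_regression(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

The same loop, with gradients supplied by backpropagation instead of a hand-derived formula, is what trains a neural network.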

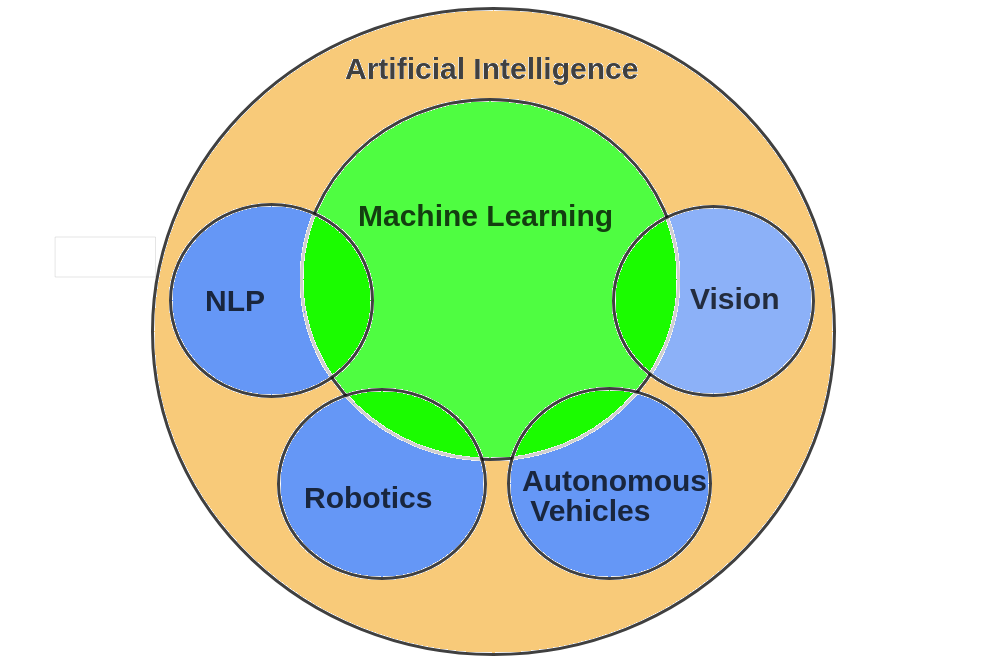

Natural Language Processing (NLP)

Overview of NLP

Natural Language Processing (NLP) is a branch of AI that focuses on enabling computers to understand, interpret, and generate human language. NLP involves a variety of tasks, such as text classification, entity recognition, sentiment analysis, machine translation, and question answering. NLP techniques are used in a wide range of applications, from virtual assistants like Siri and Alexa to language translation services like Google Translate.

Text Classification

Text classification is the task of assigning predefined categories or labels to a piece of text. It is widely used in applications such as spam filtering, sentiment analysis, and topic classification. Text classification algorithms typically use machine learning techniques to learn patterns in the text data and make predictions on new, unseen text.
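
A minimal sketch of a text classifier using multinomial naive Bayes, a classic baseline for tasks such as spam filtering. During training it counts how often each word appears under each label; for a new message it picks the label whose word statistics best explain it. The four training messages are hypothetical, and the whitespace tokenizer is deliberately simplistic.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    return text.lower().split()

def train_naive_bayes(docs):
    """docs: list of (text, label) pairs. Returns the counts needed for prediction."""
    label_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in docs:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return label_counts, word_counts, vocab

def predict_naive_bayes(model, text):
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label, n_docs in label_counts.items():
        # log P(label) + sum of log P(word | label), with add-one (Laplace) smoothing.
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for token in tokenize(text):
            if token in vocab:  # ignore words never seen during training
                score += math.log((word_counts[label][token] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

training_docs = [
    ("win a free prize now", "spam"),
    ("claim your free reward", "spam"),
    ("meeting moved to monday", "ham"),
    ("see you at lunch monday", "ham"),
]
model = train_naive_bayes(training_docs)
print(predict_naive_bayes(model, "free prize inside"))    # spam
print(predict_naive_bayes(model, "lunch meeting today"))  # ham
```

Add-one smoothing keeps a word that never appeared under one label from driving that label's probability to zero.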

Named Entity Recognition

Named Entity Recognition (NER) is the task of identifying and classifying named entities in text, such as names of people, organizations, locations, dates, and so on. NER is important for applications that require understanding and extracting information from unstructured text, such as information retrieval, question answering, and information extraction.
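
Modern NER systems are usually learned models, but the task's input and output can be illustrated with a toy dictionary (gazetteer) lookup. This sketch is not how production NER works: the entity list and type names are hypothetical, and it cannot recognize any name it has not been given.

```python
import re

# Hypothetical gazetteer mapping known names to entity types.
GAZETTEER = {
    "Ada Lovelace": "PERSON",
    "Dartmouth College": "ORGANIZATION",
    "London": "LOCATION",
}

def tag_entities(text, gazetteer=GAZETTEER):
    """Return (entity_text, entity_type, start_offset) for every gazetteer match."""
    entities = []
    for name, label in gazetteer.items():
        # \b word boundaries avoid matching inside longer words; re.escape handles punctuation.
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            entities.append((match.group(), label, match.start()))
    return sorted(entities, key=lambda e: e[2])  # order by position in the text

print(tag_entities("Ada Lovelace was born in London."))
```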

Sentiment Analysis

Sentiment analysis, also known as opinion mining, is the task of determining the sentiment or emotions expressed in a piece of text. In its simplest form, it classifies text as positive, negative, or neutral. Sentiment analysis has numerous practical applications, such as analyzing customer feedback, monitoring social media sentiment, and providing signals for stock market prediction.
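
One simple approach to sentiment analysis scores text against a lexicon of words with positive or negative weights. The sketch below assumes a tiny hypothetical lexicon; practical systems use much larger lexicons or trained classifiers.

```python
# Hypothetical mini-lexicon; real lexicons contain thousands of scored words.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}

def sentiment(text, lexicon=LEXICON):
    """Classify text as positive, negative, or neutral by summing word scores."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(lexicon.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this phone, the camera is great!"))  # positive
print(sentiment("The battery life is terrible."))            # negative
```

A known weakness of this approach is negation: “not good” still scores as positive, which is one reason trained models are usually preferred.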

This image is property of Amazon.com.

Computer Vision

Introduction to Computer Vision

Computer Vision is a subfield of AI that focuses on enabling computers to understand and interpret visual information from digital images or videos. It involves tasks such as image recognition, object detection, image segmentation, and image captioning. Computer Vision has applications in various domains, including autonomous vehicles, surveillance systems, and medical imaging.

Object Detection

Object detection is the task of locating and classifying objects of interest in digital images or videos. It involves drawing bounding boxes around the objects and assigning them labels. Object detection algorithms typically use machine learning techniques, such as convolutional neural networks, to learn patterns in the visual data and make predictions on new, unseen images.
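
Detectors output bounding boxes, and a standard way to compare a predicted box with a ground-truth box is intersection over union (IoU): the area where the boxes overlap divided by the total area they cover together. A minimal sketch, assuming boxes are given as `(x_min, y_min, x_max, y_max)` tuples:

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle; it is empty if the boxes do not overlap.
    x1, y1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    x2, y2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    intersection = max(0, x2 - x1) * max(0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap of 1 over a union of 7
```

A score of 1.0 means a perfect match and 0.0 means no overlap; evaluation benchmarks typically count a detection as correct only when its IoU exceeds a chosen threshold.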

Image Segmentation

Image segmentation is the task of dividing a digital image into multiple segments or regions, each representing a distinct object or area of the image. Classical segmentation algorithms use techniques such as clustering, edge detection, and region growing to group pixels with similar characteristics together, while many modern systems use convolutional neural networks to assign a label to every pixel.
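
Region growing, one of the classical techniques named above, can be sketched as a breadth-first flood fill: starting from a seed pixel, keep adding neighboring pixels whose intensity is close to the seed's. The small grayscale image and the tolerance value are hypothetical.

```python
from collections import deque

def region_grow(image, seed, tolerance):
    """Grow a region from `seed` (row, col), adding 4-connected neighbors whose intensity
    is within `tolerance` of the seed pixel. Returns the set of (row, col) in the region."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= tolerance):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region

# Hypothetical grayscale image: a dark area on the left, a bright area on the right.
image = [
    [10, 12, 80, 82],
    [11, 13, 81, 85],
    [9, 10, 79, 84],
]
bright = region_grow(image, seed=(0, 3), tolerance=10)
dark = region_grow(image, seed=(0, 0), tolerance=10)
print(sorted(bright))
```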

Image Captioning

Image captioning is the task of generating a textual description of the content of a digital image. It involves analyzing the visual information in the image and generating a coherent and descriptive caption that accurately represents the scene. Image captioning combines techniques from computer vision and natural language processing to generate human-readable descriptions.

Robotics and AI

Overview of Robotics and AI

Robotics is the branch of technology that deals with the design, construction, and operation of robots. AI plays a crucial role in robotics by enabling robots to perceive and understand their environment, make decisions, and interact with humans and other robots. The integration of AI and robotics has led to advancements in areas such as autonomous vehicles, industrial automation, and healthcare robotics.

Sensors and Perception

Sensors are critical components of robotic systems, providing robots with the ability to perceive and understand their environment. Sensors can capture data about the physical world, such as visual information, depth information, temperature, pressure, and more. Perception algorithms analyze this data and extract useful information that can be used for robot control and decision making.

Robot Control and Planning

Robot control involves the algorithms and techniques that enable robots to move and manipulate objects in their environment. Control systems can be based on feedback mechanisms that constantly monitor the state of the robot and adjust its actions accordingly. Planning algorithms determine the best sequence of actions for the robot to achieve a desired goal, taking into account factors such as obstacle avoidance, task complexity, and resource constraints.
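
Planning with obstacle avoidance can be illustrated with breadth-first search on a grid map, which finds a path with the fewest moves when every move costs the same. This is a simplified sketch under that assumption; planners for real robots often use weighted or heuristic searches such as Dijkstra's algorithm or A*, and must account for the robot's size and motion constraints. The map is hypothetical.

```python
from collections import deque

def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid where '#' cells are obstacles.
    Returns a shortest list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the recorded predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None

# Hypothetical map: '.' is free space, '#' is an obstacle.
grid = [
    "....",
    ".##.",
    "....",
]
path = plan_path(grid, (0, 0), (2, 3))
print(path)
```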

Human-Robot Interaction

Human-Robot Interaction (HRI) focuses on the design and study of systems that enable effective communication and collaboration between humans and robots. HRI involves areas such as speech recognition and synthesis, gesture recognition, facial expression analysis, and social robot behavior. The goal is to create robots that can understand and respond to human commands, express emotions, and interact with humans in a natural and intuitive way.

This image is property of www2.deloitte.com.

Ethics and AI

Bias and Fairness

One of the key ethical concerns in AI is the potential for bias and unfairness in AI systems. Bias can arise from the data used to train AI models, which may reflect historical biases and prejudices. Unfairness can occur when AI systems disproportionately affect certain groups or individuals. Ensuring fairness and mitigating bias in AI systems is a crucial challenge that requires careful consideration and ethical guidelines.
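
One common way to quantify unfairness is demographic parity: compare the rate of positive decisions across groups. A minimal sketch with hypothetical loan-approval decisions follows; demographic parity is only one of several fairness metrics, and different metrics can conflict with each other.

```python
def positive_rate(decisions, groups, group):
    """Fraction of members of `group` who received a positive decision (1)."""
    member_decisions = [d for d, g in zip(decisions, groups) if g == group]
    return sum(member_decisions) / len(member_decisions)

def demographic_parity_gap(decisions, groups):
    """Largest difference in positive-decision rates between any two groups (0 means parity)."""
    rates = {g: positive_rate(decisions, groups, g) for g in set(groups)}
    return max(rates.values()) - min(rates.values())

# Hypothetical loan decisions (1 = approved) for applicants from two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_gap(decisions, groups))  # 0.5
```

A gap of 0.5 here means group A is approved 75% of the time and group B only 25% of the time.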

Privacy and Security

As AI becomes more prevalent in our daily lives, privacy and security concerns become increasingly important. AI systems often require access to large amounts of personal data, raising concerns about data privacy and the potential for misuse or unauthorized access. Additionally, AI systems can be vulnerable to attacks or manipulations that can have significant consequences. Proper safeguards and regulations are necessary to protect individuals’ privacy and ensure the security of AI systems.

Automation and Job Displacement

The growing use of AI and automation technologies raises concerns about job displacement and the impact on the workforce. While AI has the potential to streamline processes and increase efficiency, it can also lead to the automation of jobs that were previously performed by humans. This can result in unemployment and economic disruption. Ensuring a smooth transition to an AI-driven economy and providing support for workers affected by automation is essential.

Accountability and Transparency

AI systems can make decisions that have significant consequences, making it crucial to ensure accountability and transparency in their operations. Understanding how AI systems make decisions and being able to trace the reasoning behind their actions is important for building trust and allowing for meaningful human oversight. Efforts are underway to develop frameworks and standards that promote accountability and transparency in AI systems.

Applications of AI

Healthcare

AI has the potential to revolutionize healthcare by improving patient care, diagnosis, and treatment outcomes. AI-powered systems can analyze patient data to detect patterns and make predictions, assist in complex medical imaging tasks, and support clinical decision-making. AI can also enhance telemedicine, personalized medicine, and drug discovery, leading to more effective and efficient healthcare delivery.

Finance

AI is transforming the financial industry by automating tasks, improving risk management, and enhancing customer service. AI-powered algorithms can analyze vast amounts of financial data in real-time to identify patterns, detect fraud, and generate investment recommendations. Chatbots and virtual assistants can provide personalized financial advice and support customer interactions. AI is also being used in algorithmic trading and credit scoring.

Transportation

AI is driving innovation in the transportation industry, with applications ranging from autonomous vehicles to traffic management systems. Self-driving cars are being developed that can navigate and interact with the environment without human intervention. AI algorithms can optimize routes, reduce congestion, and improve transportation efficiency. AI is also being used in logistics, fleet management, and ride-sharing services.

Entertainment

AI is reshaping the entertainment industry by enabling personalized experiences, content creation, and recommendation systems. AI algorithms can analyze user preferences and behavior to recommend movies, music, or books that are tailored to individual tastes. Virtual reality and augmented reality technologies are being enhanced by AI to create immersive and interactive entertainment experiences. AI is also being used in the gaming industry to create intelligent and realistic virtual characters.

In conclusion, artificial intelligence has come a long way since its early beginnings, with advancements in machine learning, deep learning, and neural networks driving the field forward. Applications of AI in natural language processing, computer vision, robotics, and various industries are becoming increasingly prevalent. While AI brings exciting possibilities, ethical considerations such as bias, privacy, job displacement, and accountability need to be addressed. The future of AI holds great potential to revolutionize various domains and improve our lives in many ways.

This image is property of miro.medium.com.